TL;DR: Lazy deserialization was recently enabled by default in V8 v6.4, reducing V8’s memory consumption by over 500 KB per browser tab on average. Read on to find out more!

Introducing V8 snapshots #

But first, let’s take a step back and have a look at how V8 uses heap snapshots to speed up creation of new Isolates (which roughly correspond to a browser tab in Chrome). My colleague Yang Guo gave a good introduction on that front in his article on custom startup snapshots:

The JavaScript specification includes a lot of built-in functionality, from math functions to a full-featured regular expression engine. Every newly-created V8 context has these functions available from the start. For this to work, the global object (for example, the window object in a browser) and all the built-in functionality must be set up and initialized into V8’s heap at the time the context is created. It takes quite some time to do this from scratch.

Fortunately, V8 uses a shortcut to speed things up: just like thawing a frozen pizza for a quick dinner, we deserialize a previously-prepared snapshot directly into the heap to get an initialized context. On a regular desktop computer, this can bring the time to create a context from 40 ms down to less than 2 ms. On an average mobile phone, this could mean a difference between 270 ms and 10 ms.

To recap: snapshots are critical for startup performance, and they are deserialized to create the initial state of V8’s heap for each Isolate. The size of the snapshot thus determines the minimum size of the V8 heap, and larger snapshots translate directly into higher memory consumption for each Isolate.

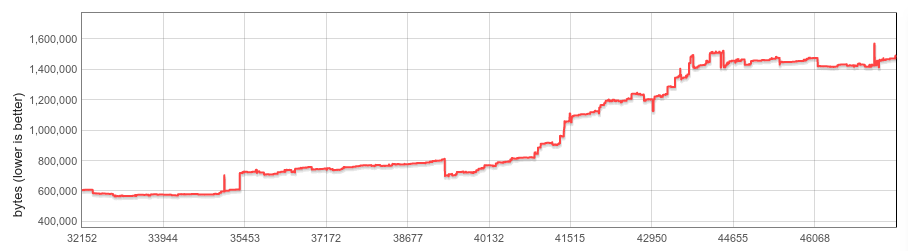

A snapshot contains everything needed to fully initialize a new Isolate, including language constants (e.g., the undefined value), internal bytecode handlers used by the interpreter, built-in objects (e.g., String), and the functions installed on built-in objects (e.g., String.prototype.replace) together with their executable Code objects. Startup snapshot size in bytes from 2016-01 to 2017-09. The x-axis shows V8 revision numbers.

Startup snapshot size in bytes from 2016-01 to 2017-09. The x-axis shows V8 revision numbers.

Over the past two years, the snapshot has nearly tripled in size, going from roughly 600 KB in early 2016 to over 1500 KB today. The vast majority of this increase comes from serialized Code objects, which have both increased in count (e.g., through recent additions to the JavaScript language as the language specification evolves and grows); and in size (built-ins generated by the new CodeStubAssembler pipeline ship as native code vs. the more compact bytecode or minimized JS formats).

This is bad news, since we’d like to keep memory consumption as low as possible.

Lazy deserialization #

One of the major pain points was that we used to copy the entire content of the snapshot into each Isolate. Doing so was especially wasteful for built-in functions, which were all loaded unconditionally but may never have ended up being used.

This is where lazy deserialization comes in. The concept is quite simple: what if we were to only deserialize built-in functions just before they were called?

A quick investigation of some of the most popular websites showed this approach to be quite attractive: on average, only 30% of all built-in functions were used, with some sites only using 16%. This looked remarkably promising, given that most of these sites are heavy JS users and these numbers can thus be seen as a (fuzzy) lower bound of potential memory savings for the web in general.

As we began working on this direction, it turned out that lazy deserialization integrated very well with V8’s architecture and there were only a few, mostly non-invasive design changes necessary to get up and running:

- Well-known positions within the snapshot. Prior to lazy deserialization, the order of objects within the serialized snapshot was irrelevant since we’d only ever deserialize the entire heap at once. Lazy deserialization must be able to deserialize any given built-in function on its own, and therefore has to know where it is located within the snapshot.

- Deserialization of single objects. V8’s snapshots were initially designed for full heap deserialization, and bolting on support for single-object deserialization required dealing with a few quirks such as non-contiguous snapshot layout (serialized data for one object could be interspersed with data for other objects) and so-called backreferences (which can directly reference objects previously deserialized within the current run).

- The lazy deserialization mechanism itself. At runtime, the lazy deserialization handler must be able to a) determine which code object to deserialize, b) perform the actual deserialization, and c) attach the serialized code object to all relevant functions.

Our solution to the first two points was to add a new dedicated built-ins area to the snapshot, which may only contain serialized code objects. Serialization occurs in a well-defined order and the starting offset of each Code object is kept in a dedicated section within the built-ins snapshot area. Both back-references and interspersed object data are disallowed.

Lazy built-in deserialization is handled by the aptly named DeserializeLazy built-in, which is installed on all lazy built-in functions at deserialization time. When called at runtime, it deserializes the relevant Code object and finally installs it on both the JSFunction (representing the function object) and the SharedFunctionInfo (shared between functions created from the same function literal). Each built-in function is deserialized at most once.

In addition to built-in functions, we have also implemented lazy deserialization for bytecode handlers. Bytecode handlers are code objects that contain the logic to execute each bytecode within V8’s Ignition interpreter. Unlike built-ins, they neither have an attached JSFunction nor a SharedFunctionInfo. Instead, their code objects are stored directly in the dispatch table into which the interpreter indexes when dispatching to the next bytecode handler. Lazy deserialization is similar as to built-ins: the DeserializeLazy handler determines which handler to deserialize by inspecting the bytecode array, deserializes the code object, and finally stores the deserialized handler in the dispatch table. Again, each handler is deserialized at most once.

Results #

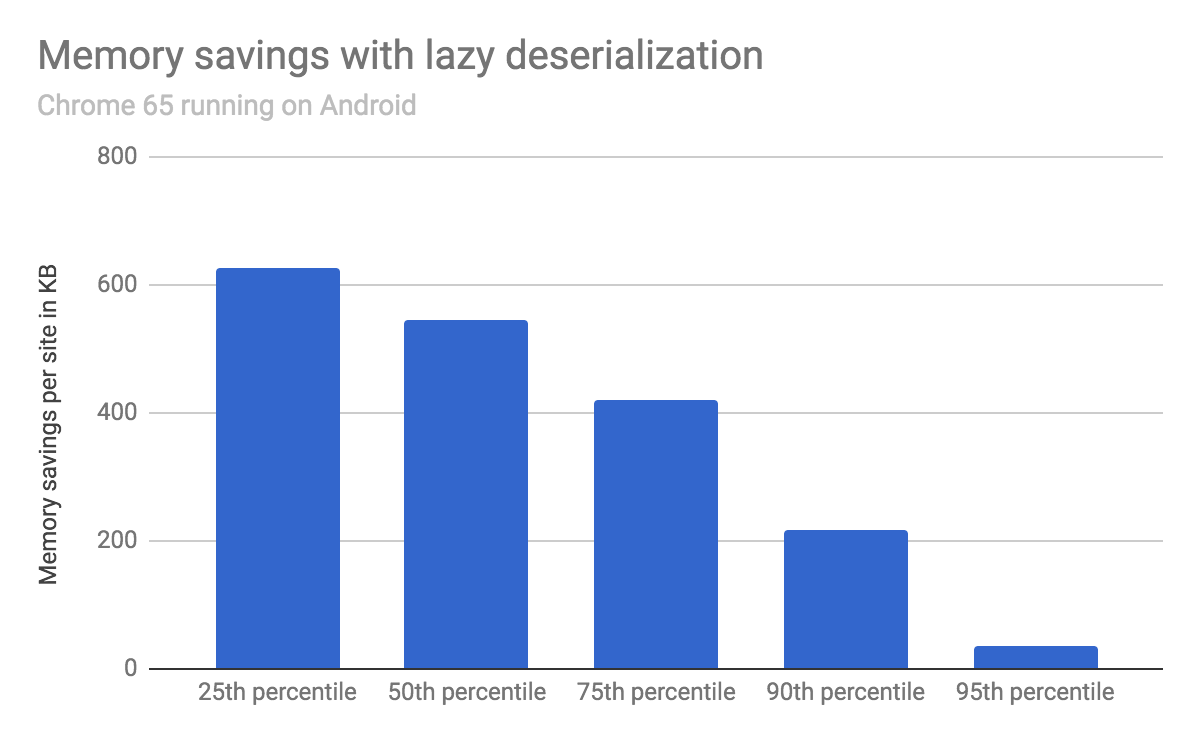

We evaluated memory savings by loading the top 1000 most popular websites using Chrome 65 on an Android device, with and without lazy deserialization.

On average, V8’s heap size decreased by 540 KB, with 25% of the tested sites saving more than 620 KB, 50% saving more than 540 KB, and 75% saving more than 420 KB.

Runtime performance (measured on standard JS benchmarks such as Speedometer, as well as a wide selection of popular websites) has remained unaffected by lazy deserialization.

Next steps #

Lazy deserialization ensures that each Isolate only loads the built-in code objects that are actually used. That is already a big win, but we believe it is possible to go one step further and reduce the (built-in-related) cost of each Isolate to effectively zero.

We hope to bring you updates on this front later this year. Stay tuned!